The AI in Conversational AI: Probing

In my last blog I mentioned that one of the ways a good Conversational AI approach generates depth of insight is through appropriate probing. Good probing leads to more detailed verbatim responses from participants, resulting in better insight.

However, not all Conversational AI tools approach probing in the same way, and the degree of ‘intelligence’ in the AI differs. This week, I thought I’d illustrate how inca approaches probing.

There are two ways in which the inca AI works for probing:

a) Smart probing

b) Targeted probing

Note that these two types of probes are in addition to user-defined probing, which doesn’t actually use any AI but can nevertheless be useful. Think of user-defined probing as the type of probing you might use when writing a qualitative discussion guide. For example, we might program inca to ask a question such as, “If you were the CEO of (company X), what one thing would you do to improve this product for customers like yourself?” This could then be followed up with a user-defined probe such as, “What makes you give that such a high priority?”

But to get back to AI probing…

a) Smart probing

Smart probing is where the AI ‘reads’ what the participant says and delivers an appropriate ‘smart’ probe in response. The type of smart probe varies with the nature and depth of the participant’s initial response.

In this first example, the participant has given a one-word answer, as is often the case with verbatim questions in online research. Here the inca smart probe asks the participant to be more specific in order to elicit more feedback.

In this next example, the participant provides a more interesting answer with a little more detail, yet still a brief response. Here inca picks up on a theme or word from the verbatim to ask “why”.

In this third example of smart probing, the participant provides a good, insightful answer. However, it is still worth probing to see if they have anything else to tell us and inca does this, per the example below.

Finally, if a participant enters gibberish, another fairly common issue with online survey verbatims, inca pulls them up on it and seeks further information.
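To make the four cases above a little more concrete, here is a minimal sketch of how a probe might be chosen from the depth of a response. This is not how inca works internally (I haven’t described its models here); it is a simple rule-based stand-in. The function names, word-count thresholds, stopword list, gibberish heuristic and probe wording are all assumptions made up for illustration.

```python
import re

# Hypothetical word list and thresholds, chosen only for illustration.
STOPWORDS = {"i", "it", "the", "a", "an", "was", "is", "in", "and", "of", "to", "that", "this"}
SHORT_ANSWER_MAX_WORDS = 2    # at or below this: ask the participant to be more specific
BRIEF_ANSWER_MAX_WORDS = 14   # at or below this: pick a word from the answer and ask "why"


def looks_like_gibberish(text):
    """Crude heuristic: no letters at all, or very few vowels among the letters."""
    letters = re.findall(r"[a-z]", text.lower())
    if not letters:
        return True
    vowel_share = sum(ch in "aeiouy" for ch in letters) / len(letters)
    return vowel_share < 0.2


def pick_theme(words):
    """Pick the longest non-stopword as a stand-in for the 'theme' of the answer."""
    content = [w for w in words if w not in STOPWORDS]
    return max(content, key=len) if content else None


def choose_smart_probe(answer):
    """Return (probe_kind, probe_text) for one participant answer."""
    if looks_like_gibberish(answer):
        return ("clarify", "Sorry, I didn't quite understand that. Could you rephrase it?")
    words = re.findall(r"[a-z']+", answer.lower())
    if len(words) <= SHORT_ANSWER_MAX_WORDS:
        return ("be_specific", "Could you be more specific? What exactly do you mean?")
    if len(words) <= BRIEF_ANSWER_MAX_WORDS:
        theme = pick_theme(words)
        if theme:
            return ("why", f"You mentioned '{theme}'. Why is that?")
    return ("anything_else", "Thanks, that's helpful. Is there anything else you'd like to add?")
```

Calling `choose_smart_probe("Good")` in this sketch returns the "be more specific" probe, while a longer, considered answer falls through to the "anything else" probe. A real system would lean on language models rather than word counts, but the branching idea is the same.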

b) Targeted probing

Targeted probing is part pre-defined probe, part smart probe. We give the AI a pre-defined instruction to use a smart probe whenever the participant mentions a particular term.

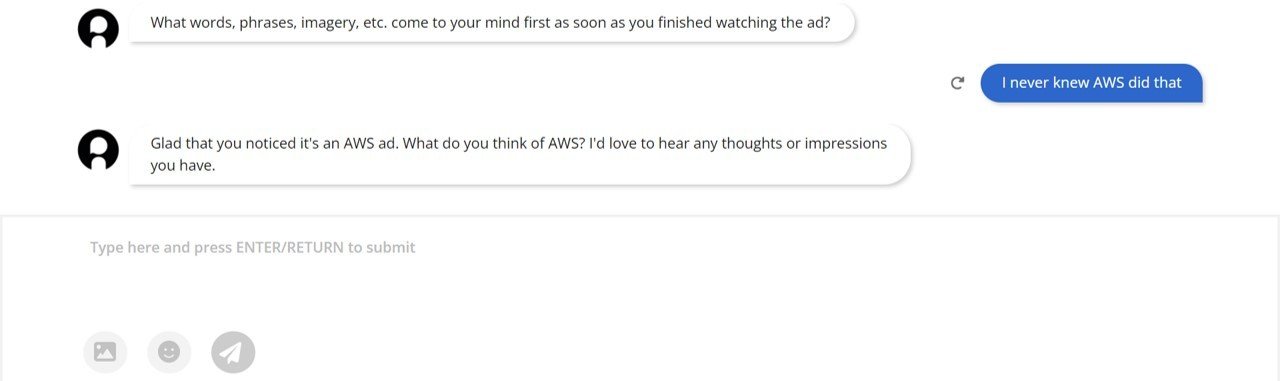

In the example below, inca is seeking feedback on an AWS (Amazon Web Services) ad we tested. For this ad we were particularly interested in probing further if the participant mentioned either AWS or technology, so we set these terms as targeted probes.

The results are illustrated in the two examples below. Note that the second example illustrates the ability of the AI to do “fuzzy” search, that is, to pick up the targeted word without the exact spelling (in this case 'tech' instead of 'technology').
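For readers who like to see the mechanics, the targeted terms and the “fuzzy” matching can be sketched in a few lines. Again, this is an illustration under assumptions rather than inca’s actual matching logic: the function names, the prefix rule and the 0.8 similarity cutoff are hypothetical, and the similarity score comes from Python’s standard `difflib` module.

```python
import re
from difflib import SequenceMatcher


def matches_term(token, term, cutoff=0.8, min_prefix=3):
    """True if token is the term, an abbreviation (prefix) of it, or a close misspelling."""
    token, term = token.lower(), term.lower()
    if token == term:
        return True
    # Abbreviations such as 'tech' for 'technology'.
    if len(token) >= min_prefix and term.startswith(token):
        return True
    # Misspellings such as 'technolgy': compare overall string similarity.
    return SequenceMatcher(None, token, term).ratio() >= cutoff


def find_targeted_term(answer, targeted_terms):
    """Return the first targeted term mentioned in the answer, or None."""
    tokens = re.findall(r"[A-Za-z]+", answer)
    for term in targeted_terms:
        if any(matches_term(tok, term) for tok in tokens):
            return term
    return None


# The targeted terms from the ad test described above.
TARGETED_TERMS = ["AWS", "technology"]
```

With these terms, an answer mentioning "tech" or "AWS" would be flagged for a follow-up smart probe, while an answer that mentions neither would return `None` and be handled by the ordinary smart probing.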

Hopefully this blog illustrates how good Conversational AI, such as inca, uses probing to elicit detailed verbatim responses from participants. Clearly this has the potential to generate a lot of rich insight, much more so than the open-ended responses from traditional online surveys. However, a large volume of open-ended, unstructured data brings with it the challenge of how to analyse it all, particularly when project timelines are tight. That will be my topic next time.