Engaging survey questions in Conversational AI

My last two blogs focused on how conversational AI can be used to generate and theme large volumes of rich verbatim data. However, conversational AI really comes into its own as a Qual x Quant approach. In our opinion, its best use case at the moment is to significantly improve the online survey experience for participants and, as a result, deliver better insight. So it is important that, with conversational AI, we focus not only on verbatims but also on engaging participants through improved versions of typical online quantitative questions. In this blog I'm going to show some examples of how Inca does this through its conversational AI.

There are three ways in which we can improve the survey experience with conversational AI:

1. More visually engaging and intuitive questions

2. Gamification

3. Combining Quant and Qual questions

1. More visually engaging and intuitive questions

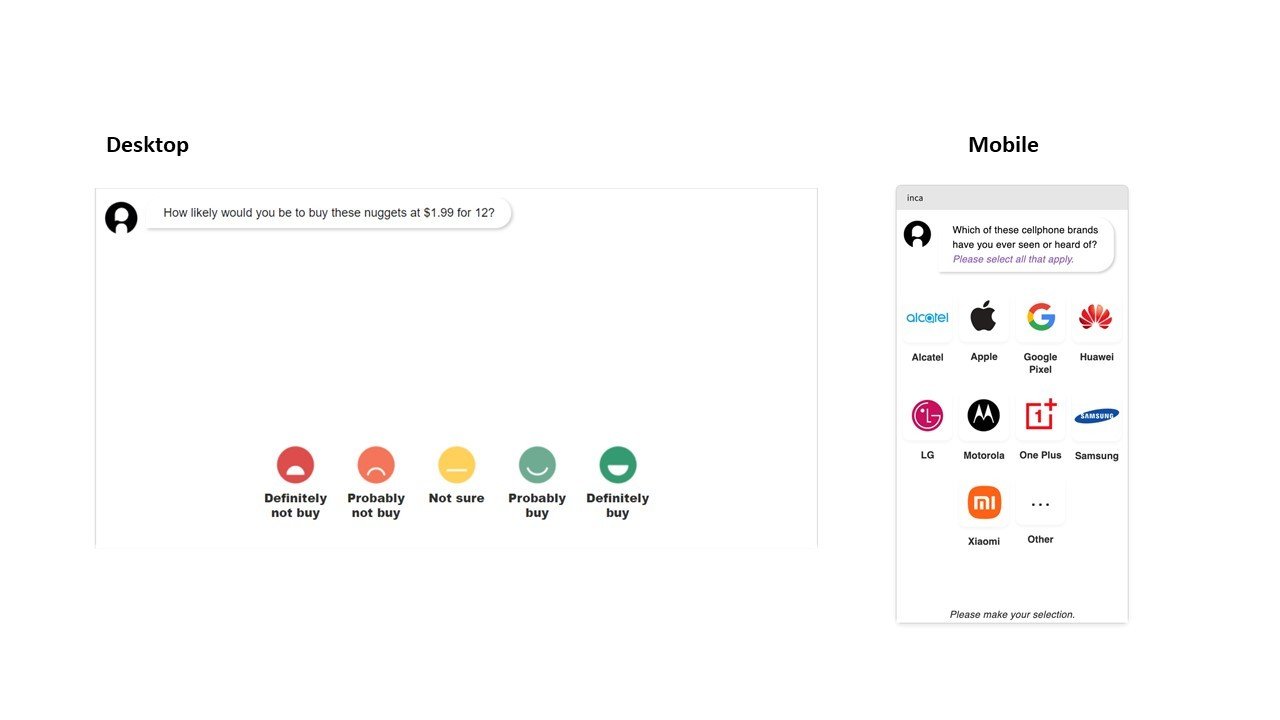

At the most basic level, we make scale questions visual for participants and deliver an experience suited to whichever device they are using to take the survey: mobile, desktop or tablet. This can be seen in the examples below.

Beyond these basic examples, anyone writing a questionnaire can easily import appropriate images for the question they are asking. The examples below show two ways of doing this: first, using emojis rather than words to understand how people feel about a topic; second, using photos of famous monuments to make a question about which countries people would like to visit more engaging.

The overall experience is more closely aligned with how people interact with social media, or with pretty much anything online these days, which leads to a more engaging experience for participants and better data.

2. Gamification

I've previously discussed how Inca uses qualitative projective techniques to elicit deeper, richer verbatims. However, projectives can also be used to gamify the quantitative research experience. One example that we use with Inca is the hot air balloon exercise. Here we ask people to imagine they are in a hot air balloon and must throw items over the side to keep it in the air.

This can be a fun way to understand how people would rank a list of items, for example brands or potential features for a new product. We ask participants to throw their least favourite item overboard first, then keep discarding items until only their favourite is left, which gives us their full ranking from worst to best.
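To make the ranking logic concrete, here is a minimal Python sketch; it is an illustration, not Inca's implementation. It assumes each participant's answer is stored as the list of items thrown overboard, in order, plus the one item left in the balloon. The function names `ranking_from_eliminations` and `average_rank` are hypothetical, and averaging rank positions across participants is just one simple aggregation, chosen here as an assumption.

```python
from collections import defaultdict
from statistics import mean


def ranking_from_eliminations(eliminated_in_order, survivor):
    """Turn one participant's hot air balloon answers into a best-to-worst ranking.

    Hypothetical input format (an assumption, not Inca's data model):
    eliminated_in_order lists the items thrown overboard, least favourite first;
    survivor is the single item left in the balloon, i.e. the favourite.
    """
    # The survivor ranks 1st and the first item thrown out ranks last,
    # so the elimination order is reversed and placed behind the survivor.
    return [survivor] + list(reversed(eliminated_in_order))


def average_rank(rankings):
    """Mean rank position (1 = best) of each item across participants.

    Averaging rank positions is an assumed aggregation chosen for illustration.
    """
    positions = defaultdict(list)
    for ranking in rankings:
        for position, item in enumerate(ranking, start=1):
            positions[item].append(position)
    # Lower mean rank means more preferred, so sort ascending.
    return sorted(
        ((item, mean(ps)) for item, ps in positions.items()),
        key=lambda pair: pair[1],
    )


if __name__ == "__main__":
    # Made-up responses from three participants choosing between three brands.
    responses = [
        (["Brand C", "Brand A"], "Brand B"),
        (["Brand C", "Brand B"], "Brand A"),
        (["Brand A", "Brand C"], "Brand B"),
    ]
    rankings = [ranking_from_eliminations(out, kept) for out, kept in responses]
    for item, score in average_rank(rankings):
        print(f"{item}: mean rank {score:.2f}")
```

Reversing the elimination order works because each throw reveals the least preferred of the items still on board, so the order in which items leave the balloon is the participant's ranking read from the bottom up.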

3. Combining Quant and Qual questions

Given that conversational AI at its best, as with Inca, is Quant x Qual, many of the questions we ask combine quantified data with open-ended feedback. For example, in any research that involves showing a stimulus such as a concept, an ad or a video, we typically ask people to click on the elements of the stimulus that stand out to them.

For each element, we then ask them whether they like it, dislike it or find it confusing, which provides a quantified read on the most and least motivating elements of the stimulus. Participants are then asked why, enabling us to link each motivating, demotivating or confusing aspect of the stimulus to the verbatims that explain why participants evaluated it that way.
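As a rough illustration, the Python sketch below shows how click-level reactions could be turned into a quantified read per element while keeping each verbatim attached to the reaction it explains. The `ElementResponse` record, the `summarise` and `rank_elements` helpers, and the net score (share of likes minus share of dislikes) are all hypothetical; this is not how the Inca dashboard is known to compute its results.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

# Assumed reaction options, mirroring the like / dislike / confusing choice.
REACTIONS = ("like", "dislike", "confusing")


@dataclass
class ElementResponse:
    """One participant's reaction to one clicked element (hypothetical record)."""
    participant_id: str
    element: str   # the part of the stimulus that was clicked
    reaction: str  # one of REACTIONS
    why: str       # open-ended answer to the follow-up "why?" probe


def summarise(responses):
    """Per element: number of clicks, share of each reaction, verbatims by reaction."""
    counts = defaultdict(Counter)
    verbatims = defaultdict(lambda: defaultdict(list))
    for r in responses:
        if r.reaction not in REACTIONS:
            raise ValueError(f"unexpected reaction: {r.reaction!r}")
        counts[r.element][r.reaction] += 1
        verbatims[r.element][r.reaction].append(r.why)

    summary = {}
    for element, c in counts.items():
        total = sum(c.values())
        summary[element] = {
            "clicks": total,
            # Counter returns 0 for reactions nobody chose for this element.
            "share": {reaction: c[reaction] / total for reaction in REACTIONS},
            "verbatims": dict(verbatims[element]),
        }
    return summary


def rank_elements(summary):
    """Order elements from most to least motivating by an assumed net score:
    share of likes minus share of dislikes."""
    def net(element):
        share = summary[element]["share"]
        return share["like"] - share["dislike"]
    return sorted(summary, key=net, reverse=True)


if __name__ == "__main__":
    # Made-up responses to a concept with a headline and a price tag.
    responses = [
        ElementResponse("p1", "headline", "like", "Catchy and clear"),
        ElementResponse("p2", "headline", "like", "Made me smile"),
        ElementResponse("p3", "price tag", "confusing", "Not sure if it's per month"),
        ElementResponse("p1", "price tag", "dislike", "Too expensive"),
    ]
    summary = summarise(responses)
    for element in rank_elements(summary):
        print(element, summary[element]["share"], summary[element]["verbatims"])
```

Keeping verbatims grouped under the reaction they belong to is what lets a reader move from "this element is disliked" straight to the reasons participants gave.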

An example of this can be seen in the screenshots below from the Inca dashboard. With the video ad on the left, we can see the peaks where most people liked or disliked something about the ad. Alongside these, the dashboard shows the verbatims explaining why that part of the ad was particularly liked or disliked by participants.

Overall, combining imaginative, engaging quantitative questions with plenty of open-ended questions and smart probes leads to a much better experience for participants and richer, more insightful data for researchers.